Listening to asthma and COPD: An AI-powered wearable could monitor respiratory health

A neck patch that monitors respiratory sounds may help manage asthma and chronic obstructive pulmonary disease (COPD) by detecting symptom flareups in real time, without compromising patient privacy.

Asthma and COPD are two of the most common chronic respiratory diseases. In Europe, the combined prevalence is about 10 percent of the general population. In Canada, an estimated 3.8 million people experience asthma and two million people experience COPD.

The chronic nature of asthma and COPD requires continuous disease monitoring and management. Patients with these conditions share many clinical symptoms, such as frequent coughing, wheezing and shortness of breath. These symptoms can fluctuate over time and worsen in certain situations, such as exposure to smoke.

Even with optimal treatment, patients experience unpredictable flareups, or exacerbations, of their conditions. These can become life-threatening and require immediate medical attention. Effective, predictive tools that enable continuous remote monitoring and early detection of exacerbations are crucial for prompt treatment and better health outcomes.

An international collaboration between researchers in Canada and Germany, with expertise in upper airway health, audio/acoustic engineering and wearable computing, is developing a wearable device to monitor these respiratory symptoms.

Privacy concerns

Wearable technologies have been widely used for remote monitoring of asthma and COPD. Most of these devices have built-in microphones to capture audible symptoms, such as coughs. However, such designs discourage patients from wearing the devices consistently, because continuously recording every sound in their daily encounters and home environment raises privacy concerns.

Efficient and intelligent algorithms are required for health wearables to meaningfully interpret data as soon as it’s fed into the system. Recent advances in artificial intelligence (AI) have rapidly changed many fields of medical diagnosis and therapy monitoring.

However, the AI “black-box” problem also creates ethical and transparency concerns in biomedicine. Most AI tools only allow us to know the algorithm’s input and output (for example, turning an input X-ray image into a predicted diagnosis as output) but not the processes and workings in between. That means we don’t know how the AI tools do what they do.

Implementing real-time analytics on wearable devices is also challenging because of their limited computing resources, yet it is essential for timely detection of airway symptoms. Developing trustworthy and cost-effective "wearable AI" is therefore central to this project.

To address these unmet challenges, our AI-powered wearables will have the capacity to protect speech privacy and perform near-real-time data analysis to empower patients and clinicians to take informed actions without delay.

Listening with protected speech privacy

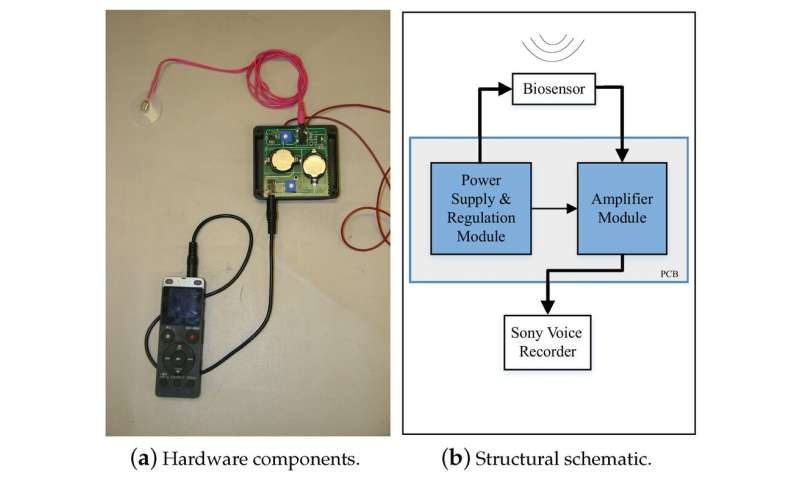

At McGill University, the Canadian team is developing a wearable device, similar in size to a Fitbit, to track and monitor the health status of the upper airway during daily activities. The device is based on mechano-acoustic sensing technology.

In a nutshell, a small, patch-like skin accelerometer is customized to be placed on the neck. When a person experiences upper airway symptoms such as coughing or a hoarse voice, the characteristic body sounds of those symptoms create acoustic waves that travel to the skin of the neck, where they become mechanical vibrations that the accelerometer can detect.

Most features of recognizable speech lie in the high-frequency range (around six to eight kilohertz). Human neck tissue acts as a filter that lets only the low-frequency components of a signal pass through. That means our sensors still pick up speech as vibration, but the recorded signal lacks the high-frequency information a listener would need to understand the words, preserving users' speech privacy.
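The filtering effect described above can be illustrated with a minimal sketch. It assumes, purely for illustration, that neck tissue behaves like a single-pole low-pass filter with a hypothetical 1 kHz cutoff and that the sensor samples at an assumed 32 kHz; the article gives neither value, and real tissue has a more complex response. The sketch measures how much of a pure tone survives the filter at a low frequency and at a frequency inside the six-to-eight-kilohertz band.

```python
import math

FS_HZ = 32_000      # assumed sensor sampling rate (not from the article)
CUTOFF_HZ = 1_000   # hypothetical tissue cutoff, for illustration only

def one_pole_lowpass(samples, cutoff_hz, fs_hz):
    """First-order (RC-style) low-pass filter: y[n] = y[n-1] + a * (x[n] - y[n-1])."""
    dt = 1.0 / fs_hz
    rc = 1.0 / (2 * math.pi * cutoff_hz)
    a = dt / (rc + dt)          # smoothing coefficient, 0 < a < 1
    out, y = [], 0.0
    for x in samples:
        y += a * (x - y)
        out.append(y)
    return out

def rms(xs):
    """Root-mean-square amplitude of a list of samples."""
    return math.sqrt(sum(x * x for x in xs) / len(xs))

def tone_gain(freq_hz, cutoff_hz=CUTOFF_HZ, fs_hz=FS_HZ):
    """Fraction of a pure tone's amplitude that survives the filter."""
    n = fs_hz // 2                                   # half a second of signal
    x = [math.sin(2 * math.pi * freq_hz * i / fs_hz) for i in range(n)]
    y = one_pole_lowpass(x, cutoff_hz, fs_hz)
    start = n // 4                                   # skip the start-up transient
    return rms(y[start:]) / rms(x[start:])

# A low-frequency component vs. a tone inside the 6-8 kHz speech band.
for f in (200, 7_000):
    print(f"{f:>5} Hz: {tone_gain(f):.0%} of amplitude passes through")
```

Under these assumptions the 200 Hz tone passes almost unchanged, while the 7 kHz tone is strongly attenuated, which is the intuition behind the privacy argument.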

We are now developing a smartphone application that will connect to the wearable device. This mobile app will generate a diary summary of upper airway health for patients. With users' consent, the report can also be sent to their primary health-care providers for remote monitoring.
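A diary summary of this kind could be as simple as counting detected symptoms per day. The sketch below assumes, hypothetically, that the wearable reports each detection as an ISO timestamp plus a symptom label; the record format, labels and `daily_diary` helper are illustrative and not part of the team's app.

```python
from collections import Counter, defaultdict
from datetime import datetime

def daily_diary(events):
    """Group (ISO timestamp, symptom label) events by calendar day and count each label."""
    diary = defaultdict(Counter)
    for stamp, label in events:
        day = datetime.fromisoformat(stamp).date().isoformat()
        diary[day][label] += 1
    return {day: dict(counts) for day, counts in sorted(diary.items())}

# Hypothetical detections, in a format we assume the wearable might send to the phone.
events = [
    ("2024-03-01T08:15:00", "cough"),
    ("2024-03-01T08:16:30", "cough"),
    ("2024-03-01T13:02:10", "throat clearing"),
    ("2024-03-02T07:45:00", "hoarse voice"),
    ("2024-03-02T21:30:00", "cough"),
]

for day, counts in daily_diary(events).items():
    summary = ", ".join(f"{n} x {label}" for label, n in sorted(counts.items()))
    print(f"{day}: {summary}")
```

A per-day count is easy to read for patients and compact enough to share with a clinician; a real app would likely add trends and context such as medication use.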

Small and intelligent AI

At Friedrich-Alexander-Universität Erlangen-Nürnberg, the German team has developed deep neural networks (the models behind deep learning, a subfield of AI) that are very lean, needing less than 150 kilobytes of memory. Lean models matter because continuous monitoring generates a large and complex stream of data. In a recent publication, we reported that our algorithms perform on par with state-of-the-art algorithms, even though they fit on a low-cost microcontroller.
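To see why a 150-kilobyte budget is restrictive, it helps to count parameters. The sketch below uses a hypothetical small 1-D convolutional network (not the published architecture) and assumes that weights are stored either as 32-bit floats or as 8-bit integers after quantization; the article does not say which technique the team uses. Only weight storage is counted here, although activations and program code also consume memory on a microcontroller.

```python
def conv1d_params(c_in, c_out, kernel):
    """Weights (c_in * c_out * kernel) plus one bias per output channel."""
    return c_in * c_out * kernel + c_out

def dense_params(n_in, n_out):
    """Fully connected layer: weight matrix plus one bias per output."""
    return n_in * n_out + n_out

# Hypothetical layer stack for illustration; not the team's published model.
layers = [
    conv1d_params(1, 16, 5),
    conv1d_params(16, 32, 5),
    conv1d_params(32, 64, 5),
    conv1d_params(64, 128, 3),
    dense_params(128, 64),   # assumed global pooling over time before this layer
    dense_params(64, 4),     # e.g. cough / throat clearing / hoarse voice / other
]

total = sum(layers)
BUDGET_BYTES = 150_000       # "less than 150 kilobytes", decimal kB assumed

for name, bytes_per_weight in (("float32", 4), ("int8", 1)):
    size = total * bytes_per_weight
    verdict = "fits within" if size < BUDGET_BYTES else "exceeds"
    print(f"{total} params as {name}: {size} bytes ({verdict} budget)")
```

With these made-up layer sizes, the same network exceeds the budget in 32-bit floats but fits comfortably at one byte per weight, which is one common way of making models small enough for microcontrollers.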

Our current project will build on these findings and extend these cost-effective AI algorithms to automate the analysis of mechano-acoustic signals. That information, together with other user-specific data (such as local air quality and reliever medication use), can be used to predict a patient's risk of asthma or COPD symptom exacerbation.
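The article does not describe the prediction model, so the following is only a sketch of the general idea of combining symptom counts with user-specific data. It swaps in a simple logistic (sigmoid) risk score; the input fields, weights and bias are hypothetical placeholders, not fitted or clinically validated values.

```python
import math
from dataclasses import dataclass

@dataclass
class DailyInputs:
    coughs: int               # count from the wearable's symptom detector
    hoarse_minutes: float     # hypothetical: minutes flagged as hoarse voice
    air_quality_index: float  # local air quality (higher = worse)
    reliever_puffs: int       # reliever medication use reported by the patient

# Placeholder weights for illustration only; a real model would be fitted to clinical data.
WEIGHTS = {
    "coughs": 0.05,
    "hoarse_minutes": 0.02,
    "air_quality_index": 0.01,
    "reliever_puffs": 0.4,
}
BIAS = -4.0

def exacerbation_risk(day: DailyInputs) -> float:
    """Logistic model: risk = 1 / (1 + exp(-(bias + sum(w_i * x_i))))."""
    z = BIAS + sum(w * getattr(day, name) for name, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))

calm = DailyInputs(coughs=5, hoarse_minutes=0, air_quality_index=30, reliever_puffs=0)
flare = DailyInputs(coughs=60, hoarse_minutes=45, air_quality_index=150, reliever_puffs=6)
print(f"calm day:  {exacerbation_risk(calm):.2f}")
print(f"flare day: {exacerbation_risk(flare):.2f}")
```

A logistic score is used here because it is easy to inspect: each weight shows how much one input pushes the risk up, which speaks to the transparency concerns raised earlier.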

At present, the device is at the testing stage. By analyzing the magnitude and pattern of these neck-surface vibration signals, our AI-based technology can currently identify airway-related symptoms such as cough, throat clearing and hoarse voice with over 80 percent accuracy. Reliable symptom identification is a prerequisite for accurately judging severity.
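Looking at signal magnitude often starts with finding where something happens at all. The sketch below shows one possible pre-processing step, assumed for illustration: it splits a vibration signal into fixed-length frames, computes each frame's RMS amplitude and marks runs of frames above a threshold as candidate events. The frame length, threshold and synthetic signal are hypothetical, and the team's actual detector is a deep neural network, not this threshold rule; a classifier would then label each candidate event.

```python
import math

def frame_rms(signal, frame_len):
    """RMS amplitude of consecutive, non-overlapping frames."""
    return [
        math.sqrt(sum(x * x for x in signal[i:i + frame_len]) / frame_len)
        for i in range(0, len(signal) - frame_len + 1, frame_len)
    ]

def detect_events(signal, frame_len, threshold):
    """Return (start_frame, end_frame) pairs, end exclusive, where frame RMS >= threshold."""
    levels = frame_rms(signal, frame_len)
    events, start = [], None
    for idx, level in enumerate(levels):
        if level >= threshold and start is None:
            start = idx                      # an event begins
        elif level < threshold and start is not None:
            events.append((start, idx))      # the event has ended
            start = None
    if start is not None:                    # event still active at the end
        events.append((start, len(levels)))
    return events

# Synthetic signal: silence with two vibration bursts (purely illustrative).
signal = [0.0] * 1000
for i in list(range(200, 300)) + list(range(600, 750)):
    signal[i] = 0.5 * math.sin(2 * math.pi * i / 10)

print(detect_events(signal, frame_len=50, threshold=0.1))
```

Frame-level processing like this keeps memory use small, since only one frame needs to be held at a time, which suits the constrained hardware described above.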

Early detection of asthma and COPD flareups remains an unmet clinical need, but this technology may be useful for other conditions, too. For example, we anticipate that this application can be extended to monitor “long COVID” because some of its symptoms—such as shortness of breath and coughing—overlap with those of asthma and COPD.
